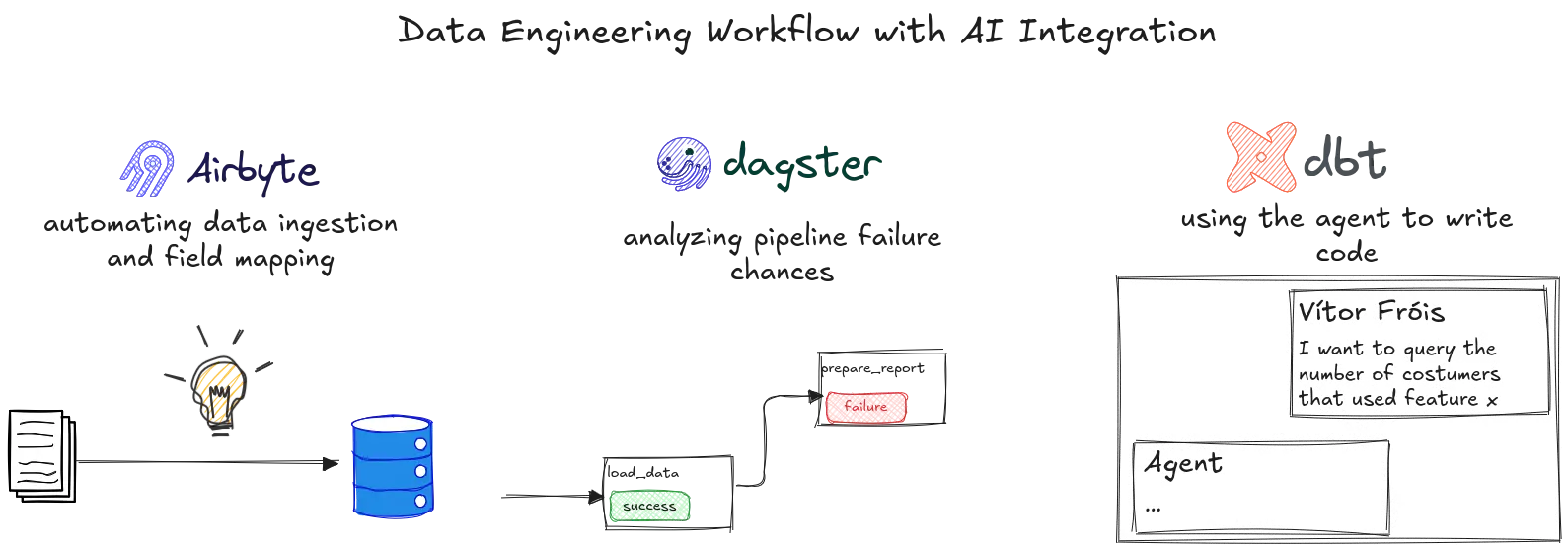

Data engineering often feels like a grind of repetitive tasks, fragile pipelines, and endless monitoring. The good news is that AI is starting to turn these pain points into smoother, more automated workflows. Rather than replacing the heavy hitters (Airbyte, Dagster, and dbt), AI is being built into and around them to improve reliability, speed, and engineer productivity. Looking at these integrations shows how data teams can work smarter without losing control.

AI Empowering Data Ingestion and Integration

Airbyte’s core strength is flexible, scalable data ingestion, but manual schema mapping and handling schema drift have historically slowed teams down. AI integration helps by automatically detecting schemas and mapping source fields to destinations without constant human intervention. This reduces onboarding friction when adding new data sources, which traditionally required painstaking manual setup.
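To make the field-mapping idea concrete, here is a minimal sketch in plain Python. It is not Airbyte's implementation or API: the function names are hypothetical, and simple string similarity from the standard library stands in for whatever model a real integration would use.

```python
from difflib import SequenceMatcher


def normalize(name: str) -> str:
    """Lowercase a field name and drop separators so 'CustomerID' ~ 'customer_id'."""
    return "".join(ch for ch in name.lower() if ch.isalnum())


def suggest_field_mapping(source_fields, destination_fields, threshold=0.6):
    """Suggest a destination field for each source field (hypothetical helper).

    Fields whose best match scores below `threshold` map to None, so a human
    can review them instead of trusting a weak guess.
    """
    mapping = {}
    for src in source_fields:
        best, best_score = None, 0.0
        for dst in destination_fields:
            score = SequenceMatcher(None, normalize(src), normalize(dst)).ratio()
            if score > best_score:
                best, best_score = dst, score
        mapping[src] = best if best_score >= threshold else None
    return mapping


if __name__ == "__main__":
    print(suggest_field_mapping(
        ["CustomerID", "e-mail", "zzz"],
        ["customer_id", "email", "created_at"],
    ))
```

The threshold is the important design choice here: anything the matcher is unsure about is left unmapped rather than silently guessed, which keeps the engineer in the loop.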

Beyond schema detection, AI also continuously monitors for schema changes or anomalies, alerting engineers before a pipeline breaks. This proactive approach reduces downtime and avoids scrambling to fix broken jobs after the fact. By offloading these error-prone tasks to AI, data engineers can focus on designing meaningful data flows rather than babysitting connectors.
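The simplest form of the schema-change check described above can be sketched as a diff between two schema snapshots. This is an illustrative assumption about how such a check might work, not Airbyte code: the schema format (a dict of column name to type string) and the function name are hypothetical.

```python
def detect_schema_drift(previous: dict, current: dict) -> dict:
    """Compare two {column: type} snapshots and report what changed."""
    prev_cols, cur_cols = set(previous), set(current)
    return {
        "added": sorted(cur_cols - prev_cols),
        "removed": sorted(prev_cols - cur_cols),
        "type_changed": sorted(
            col for col in prev_cols & cur_cols if previous[col] != current[col]
        ),
    }


if __name__ == "__main__":
    yesterday = {"id": "integer", "email": "string", "age": "integer"}
    today = {"id": "integer", "email": "string", "age": "string", "plan": "string"}
    drift = detect_schema_drift(yesterday, today)
    if any(drift.values()):
        # In a real setup this would notify someone before the next sync runs.
        print("Schema drift detected:", drift)
```

An AI-assisted monitor would sit on top of a diff like this, deciding which changes are harmless and which deserve an alert.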

Smarter Orchestration and Workflow Management

Dagster, as a modern orchestration platform, benefits from AI in several key ways that enhance pipeline robustness and visibility. Predictive failure detection is a standout: AI models analyze historical runs and detect warning signs of potential failures, allowing teams to intervene proactively instead of reacting after outages.
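As a rough illustration of spotting warning signs in historical runs, the sketch below flags a run whose duration is far from the recent norm. It uses a plain z-score heuristic as a stand-in for a trained model, and it does not use any Dagster API: the function name and the idea of feeding it run durations in seconds are assumptions for the example.

```python
from statistics import mean, stdev


def is_run_anomalous(history_seconds, latest_seconds, z_threshold=3.0):
    """Return True if the latest run duration deviates strongly from history.

    A z-score check is a simple stand-in for a predictive model: it only
    says "this run looks unlike the previous ones", not why.
    """
    if len(history_seconds) < 2:
        return False  # not enough history to estimate variability
    mu = mean(history_seconds)
    sigma = stdev(history_seconds)
    if sigma == 0:
        return latest_seconds != mu
    return abs(latest_seconds - mu) / sigma > z_threshold


if __name__ == "__main__":
    history = [100, 102, 98, 101, 99]
    print(is_run_anomalous(history, 130))  # far slower than usual
    print(is_run_anomalous(history, 101))  # within normal variation
```

A run that suddenly takes much longer often precedes a timeout or an out-of-memory failure, which is why duration is a reasonable first signal to watch.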

AI also optimizes resource management by dynamically adjusting task parallelism and scheduling based on workload patterns and system health, improving cluster utilization without manual tuning. On the observability front, AI-powered alert triaging helps engineers cut through alert noise by highlighting critical failures and filtering out false positives. This means teams spend less time firefighting and more time iterating on data products.
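Alert triaging can be pictured as grouping duplicate alerts, scoring each group, and dropping the ones that fall below a cutoff. The sketch below does this with fixed rules rather than a learned model; the alert shape (`asset`, `message`, `severity`), the weights, and the function name are all hypothetical.

```python
from collections import Counter

# Hypothetical weights: higher means more urgent.
SEVERITY_WEIGHT = {"critical": 3, "warning": 2, "info": 1}


def triage_alerts(alerts, min_score=3):
    """Group identical alerts, score each group, and return the urgent ones first.

    score = (highest severity weight in the group) * (number of occurrences)
    """
    counts = Counter((a["asset"], a["message"]) for a in alerts)
    severity = {}
    for a in alerts:
        key = (a["asset"], a["message"])
        weight = SEVERITY_WEIGHT.get(a["severity"], 1)
        severity[key] = max(severity.get(key, 0), weight)

    ranked = []
    for (asset, message), count in counts.items():
        score = severity[(asset, message)] * count
        if score >= min_score:  # low-scoring groups are treated as noise
            ranked.append(
                {"asset": asset, "message": message, "count": count, "score": score}
            )
    return sorted(ranked, key=lambda r: r["score"], reverse=True)


if __name__ == "__main__":
    alerts = [
        {"asset": "orders", "message": "timeout", "severity": "critical"},
        {"asset": "users", "message": "slow", "severity": "info"},
        {"asset": "events", "message": "late", "severity": "warning"},
        {"asset": "events", "message": "late", "severity": "warning"},
    ]
    for row in triage_alerts(alerts):
        print(row)
```

An AI-powered triager would replace the hand-written weights with something learned from which past alerts engineers actually acted on.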

AI-Assisted Data Transformation and Testing

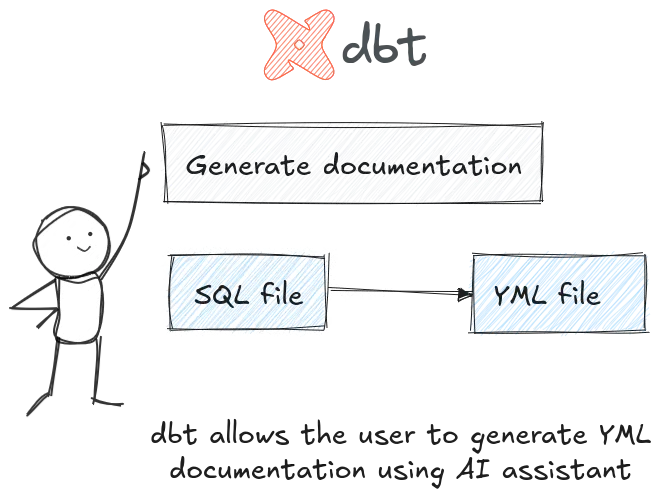

dbt has long been the go-to tool for SQL-based transformations, and its AI enhancements aim to speed up development while maintaining quality. AI-assisted code generation helps engineers by suggesting SQL snippets tailored to the project context, reducing boilerplate and speeding iteration cycles.

Testing and documentation also see AI support: smart test recommendations catch common anomalies before they propagate, and AI-generated documentation updates keep project knowledge fresh without extra manual work. The human engineer remains central, though: someone still has to validate and tune these outputs so that transformations meet business needs precisely.
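To show what a test recommendation might look like, here is a small sketch that profiles a sample of column values and proposes dbt's built-in generic tests (`not_null`, `unique`, `accepted_values`). The profiling rules, the `max_categories` cutoff, and the function name are assumptions for illustration; this is not how dbt itself generates suggestions.

```python
def suggest_tests(column_values, max_categories=5):
    """Propose dbt generic tests for a column based on a sample of its values."""
    tests = []
    if not column_values:
        return tests  # nothing to learn from an empty sample

    non_null = [v for v in column_values if v is not None]
    distinct = set(non_null)

    if len(non_null) == len(column_values):
        tests.append("not_null")
    if non_null and len(distinct) == len(non_null):
        tests.append("unique")
    # Few distinct values that repeat suggests a category-like column.
    if 0 < len(distinct) <= max_categories and len(distinct) < len(non_null):
        tests.append("accepted_values")
    return tests


if __name__ == "__main__":
    print(suggest_tests([1, 2, 3, 4]))          # id-like column
    print(suggest_tests(["a", "b", "a", None]))  # category-like column with nulls
```

These are suggestions drawn from a sample, so they can be wrong: a column that happens to be unique in 1,000 rows may not be unique in production, which is exactly where human review comes in.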

Beyond the Pipeline: Metadata, Governance, and Collaboration

AI’s impact extends well beyond individual pipeline steps, especially in metadata management and data governance. Automatic tagging and lineage inference simplify understanding complex data landscapes, reducing the time needed to trace data origins and dependencies. By continuously monitoring data quality metrics using AI, teams can detect issues early and ensure compliance with organizational standards. This not only boosts confidence in data products but also smooths collaboration by making datasets more discoverable and trustworthy across business units.
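Lineage tracing can be illustrated with a much simpler mechanism than AI inference: reading the `ref()` calls that dbt models already contain. The sketch below parses single-argument `ref('model')` calls with a regular expression and walks the resulting graph; the function names and the sample models are hypothetical, and two-argument `ref('package', 'model')` calls are not handled.

```python
import re

# Matches {{ ref('model_name') }} style calls with single or double quotes.
REF_PATTERN = re.compile(r"ref\(\s*['\"]([^'\"]+)['\"]\s*\)")


def infer_lineage(models: dict) -> dict:
    """Map each model name to the sorted list of models it references directly."""
    return {name: sorted(set(REF_PATTERN.findall(sql))) for name, sql in models.items()}


def upstream(model: str, lineage: dict) -> list:
    """Return every direct and indirect upstream dependency of `model`."""
    seen = set()
    stack = [model]
    while stack:
        for parent in lineage.get(stack.pop(), []):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return sorted(seen)


if __name__ == "__main__":
    models = {
        "stg_orders": "select * from raw.orders",
        "stg_customers": "select * from raw.customers",
        "orders_enriched": (
            "select * from {{ ref('stg_orders') }} "
            "join {{ ref(\"stg_customers\") }} using (customer_id)"
        ),
        "revenue": "select sum(amount) from {{ ref('orders_enriched') }}",
    }
    lineage = infer_lineage(models)
    print(upstream("revenue", lineage))
```

Inference becomes useful where explicit references run out, for example when a table is loaded by a script outside the project and the dependency has to be guessed from column names or query logs.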

Conclusion: AI Is a Force Multiplier, Not a Replacement

The promise of AI in data engineering isn’t to automate humans out of the loop but to lift tedious work off their plates. Integrations with Airbyte, Dagster, and dbt show that AI can enhance reliability, speed, and developer experience without sacrificing control or flexibility. Embracing these advances means your data team can focus on strategic initiatives rather than firefighting, building a more resilient and efficient data stack.