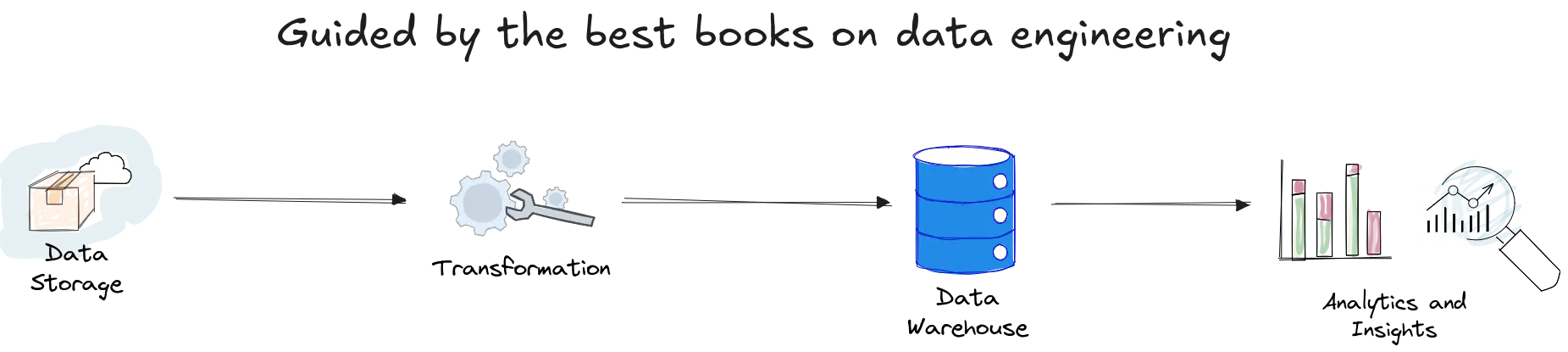

Building reliable data pipelines can feel like assembling furniture with missing pieces: frustrating, time-consuming, and prone to structural collapse. Many data engineers struggle with piecing together knowledge from scattered blogs and documentation. This guide compiles the best books on data engineering to provide structured, expert-backed resources for mastering the field.

Professionals across industries rely on these books to move beyond ad hoc solutions toward scalable, maintainable systems. Each title here offers distinct value, whether through grounding in core principles, practical tooling guidance, frameworks for data quality and governance, or a deep treatment of distributed system design.

Fundamentals of Data Engineering by Joe Reis and Matt Housley

Widely regarded as the modern reference for aspiring and practicing data engineers, Fundamentals of Data Engineering offers a comprehensive overview of the data engineering lifecycle. From data ingestion and storage to orchestration and observability, the book connects theoretical concepts to real-world systems with practical clarity.

Readers frequently highlight its value in demystifying the often overwhelming ecosystem of tools and frameworks. The authors provide guidance on when and why to use popular technologies such as Apache Kafka, Airflow, and dbt, avoiding the trap of recommending tools without context.

Although some newcomers might find the book dense, it's particularly beneficial for those looking to build a strategic understanding of modern data architectures. By emphasizing trade-offs in design decisions, it equips readers to build robust, scalable pipelines without over-engineering them.

Data Quality Fundamentals by Barr Moses, Lior Gavish, and Molly Vorwerck

Bad data can silently erode the integrity of entire analytics systems. Data Quality Fundamentals addresses this critical challenge, making it a must-read among the best books on data engineering. Authored by industry veterans who specialize in data reliability, the book introduces frameworks for measuring and improving data quality.

Key concepts such as data SLAs, observability, and lineage are presented with actionable examples. The book helps shift the mindset of data engineers from merely moving data to ensuring its reliability, fostering greater trust in data-driven decision-making across organizations.
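As a rough illustration of what an actionable data SLA can look like, the sketch below checks two common expectations on a table: freshness (was it loaded recently enough?) and completeness (is the null rate of a key column under a threshold?). This is not code from the book; the function names, thresholds, and the `orders` sample data are hypothetical, and in practice such checks usually run against a warehouse rather than in-memory rows.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class CheckResult:
    name: str
    passed: bool
    detail: str


def check_freshness(last_loaded_at: datetime, max_age: timedelta, now: datetime) -> CheckResult:
    """Pass if the table was loaded within the agreed freshness window (the 'SLA')."""
    age = now - last_loaded_at
    return CheckResult("freshness", age <= max_age, f"age={age}, limit={max_age}")


def check_null_rate(rows: list[dict], column: str, max_null_rate: float) -> CheckResult:
    """Pass if the share of rows where `column` is missing or None stays under the threshold."""
    name = f"null_rate:{column}"
    if not rows:
        # An empty batch is treated as a failure here; that policy is an assumption.
        return CheckResult(name, False, "no rows to check")
    nulls = sum(1 for row in rows if row.get(column) is None)
    rate = nulls / len(rows)
    return CheckResult(name, rate <= max_null_rate, f"rate={rate:.2%}, limit={max_null_rate:.2%}")


if __name__ == "__main__":
    now = datetime(2024, 1, 2, 9, 0, tzinfo=timezone.utc)
    # Hypothetical sample batch from an `orders` table.
    orders = [
        {"order_id": 1, "customer_id": 10},
        {"order_id": 2, "customer_id": None},
        {"order_id": 3, "customer_id": 12},
        {"order_id": 4, "customer_id": 13},
    ]
    results = [
        check_freshness(datetime(2024, 1, 2, 6, 0, tzinfo=timezone.utc), timedelta(hours=6), now),
        check_null_rate(orders, "customer_id", max_null_rate=0.05),
    ]
    for result in results:
        status = "PASS" if result.passed else "FAIL"
        print(f"{status} {result.name}: {result.detail}")
    # Freshness passes (3 hours old, 6-hour limit); the null-rate check fails (25% > 5%).
```

Returning structured results instead of raising immediately lets a scheduler or alerting tool decide how to react, which fits the proactive-monitoring mindset the book encourages.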

While it is particularly valuable for mid-level engineers and team leads responsible for maintaining production pipelines, the structured approaches to detecting and mitigating data issues make it relevant for anyone working in data engineering. The emphasis on proactive monitoring and root cause analysis aligns well with modern practices in data reliability engineering.

The Data Warehouse Toolkit by Ralph Kimball and Margy Ross

A cornerstone of data warehouse design, The Data Warehouse Toolkit continues to be essential reading despite the evolution of data platforms. Ralph Kimball’s methodologies, particularly dimensional modeling, have shaped how enterprises structure their analytical systems for decades.

The book meticulously details techniques such as star schemas and the handling of slowly changing dimensions; it also covers snowflake schemas, though it generally advises against snowflaking dimension tables. While some may consider its approach traditional, these patterns remain deeply embedded in modern cloud data warehouses and business intelligence tools.
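To make slowly changing dimensions concrete, here is a minimal sketch of a Type 2 change, the approach in which each change to a tracked attribute adds a new dimension row and expires the previous one, so history is preserved. The row layout (`CustomerDimRow`), the `9999-12-31` open-ended expiry date, and the half-open `[effective_date, expiration_date)` convention are assumptions for illustration; the book covers several SCD types and variations, and a production pipeline would more likely express this in SQL inside the warehouse.

```python
from dataclasses import dataclass, replace
from datetime import date

# Placeholder expiry for the current row; a common convention, assumed here.
HIGH_DATE = date(9999, 12, 31)


@dataclass(frozen=True)
class CustomerDimRow:
    surrogate_key: int      # warehouse-generated key referenced by fact tables
    customer_id: str        # natural/business key from the source system
    city: str               # attribute tracked as Type 2
    effective_date: date    # first day this version is valid
    expiration_date: date   # first day this version is no longer valid
    is_current: bool


def apply_scd2(dim: list[CustomerDimRow], customer_id: str, new_city: str,
               change_date: date) -> list[CustomerDimRow]:
    """Return a new dimension list with a Type 2 change applied for one customer."""
    current = next((r for r in dim if r.customer_id == customer_id and r.is_current), None)
    if current is not None and current.city == new_city:
        return list(dim)  # attribute unchanged, so no new version is needed
    next_key = max((r.surrogate_key for r in dim), default=0) + 1
    # Expire the existing current row (if any) instead of overwriting it.
    updated = [
        replace(r, expiration_date=change_date, is_current=False) if r is current else r
        for r in dim
    ]
    # Add the new version as the current row.
    updated.append(CustomerDimRow(next_key, customer_id, new_city, change_date, HIGH_DATE, True))
    return updated


if __name__ == "__main__":
    dim = apply_scd2([], "C-001", "Boston", date(2023, 1, 1))
    dim = apply_scd2(dim, "C-001", "Denver", date(2024, 6, 1))
    for row in dim:
        print(row)
```

Because old rows are expired rather than updated in place, facts recorded while the customer lived in Boston keep pointing at the Boston version of the dimension.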

For data engineers tasked with creating or maintaining analytics-friendly data models, Kimball's work offers time-tested strategies for consistency, performance, and scalability. Its systematic approach to data warehouse design means that even as technologies evolve, the underlying architectural principles remain sound and applicable.

DAMA-DMBOK: Data Management Body of Knowledge by DAMA International

The DAMA-DMBOK is the definitive reference for data management professionals. Comprehensive and methodical, it encompasses everything from data governance and architecture to metadata management and security, making it one of the best books on data engineering for understanding the broader context of data work.

Rather than a narrative-driven guide, this book serves as an extensive framework for establishing and maintaining data management practices. Organizations seeking to implement or refine their data governance policies will find it particularly useful, as it aligns with global standards and best practices.

Though not focused solely on engineering topics like pipelines or distributed systems, the DAMA-DMBOK provides foundational knowledge essential for roles involving data architecture, compliance, and enterprise data strategy. Its structured approach makes it indispensable for those aiming to mature their organization’s data capabilities beyond technical implementation alone.

Designing Data-Intensive Applications by Martin Kleppmann

A seminal work on distributed systems, Designing Data-Intensive Applications is frequently cited as one of the best books on data engineering. Martin Kleppmann’s writing excels at breaking down complex topics such as consistency models, distributed logs, and stream processing into accessible yet rigorous explanations.

The book is praised for its deep exploration of system design trade-offs, particularly around consistency, availability, and fault tolerance. Kleppmann discusses the CAP theorem but argues that its narrow definitions limit its practical usefulness, steering readers toward more precise concepts such as linearizability. Through detailed examples and diagrams, the book clarifies concepts like replication, partitioning, and event sourcing, which are critical for designing reliable, scalable data systems.
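Replication is a good example of the trade-offs the book walks through. For leaderless replication, Kleppmann describes the quorum condition w + r > n: with n replicas, if each write must be acknowledged by w nodes and each read queries r nodes, then every read contacts at least one node that accepted the latest write. The brute-force check below is an illustrative sketch, not code from the book; `quorums_always_overlap` is a hypothetical helper that simply confirms the condition on small cases.

```python
from itertools import combinations


def quorums_always_overlap(n: int, w: int, r: int) -> bool:
    """Check every possible write set of size w against every read set of size r.

    Returns True only if each pair shares at least one replica.
    """
    nodes = range(n)
    return all(
        set(write_set) & set(read_set)  # an empty intersection is falsy
        for write_set in combinations(nodes, w)
        for read_set in combinations(nodes, r)
    )


if __name__ == "__main__":
    for n, w, r in [(3, 2, 2), (3, 1, 2), (5, 3, 3), (5, 2, 3)]:
        print(f"n={n} w={w} r={r}: w+r>n is {w + r > n}, "
              f"brute-force overlap is {quorums_always_overlap(n, w, r)}")
```

The overlap guarantee only concerns which nodes are contacted; the book also covers edge cases, such as concurrent writes or writes that succeed on only some replicas, that can still return stale values even when w + r > n.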

While it requires a solid technical foundation, this book is essential reading for senior engineers and architects responsible for building high-throughput, resilient platforms. Kleppmann's synthesis of academic theory and practical insight makes it a foundational text for anyone serious about mastering modern data architectures.

Conclusion

The best books on data engineering bridge the gap between improvised fixes and systems that scale reliably under pressure. Covering everything from core methodologies and essential tooling to governance frameworks and distributed architectures, these titles equip data engineers with the skills needed at every career stage.

Studying these resources helps professionals design robust pipelines, improve data quality, and navigate the complexities of modern data ecosystems with confidence.

Ready to streamline your data workflows? Briefer makes it simple and fast to transform raw data into clean, actionable insights. Try for free now!