If you've ever battled with spreadsheets, you know the challenges: endless rows, confusing formulas, and the constant fear of crashing your computer. These frustrations are common among data scientists who rely on spreadsheets for data analysis. However, Python data science libraries offer robust tools that transform chaotic spreadsheets into streamlined, insightful workflows. Say goodbye to clunky interfaces and hello to Python's elegant simplicity.

Why Python Data Science Libraries Matter

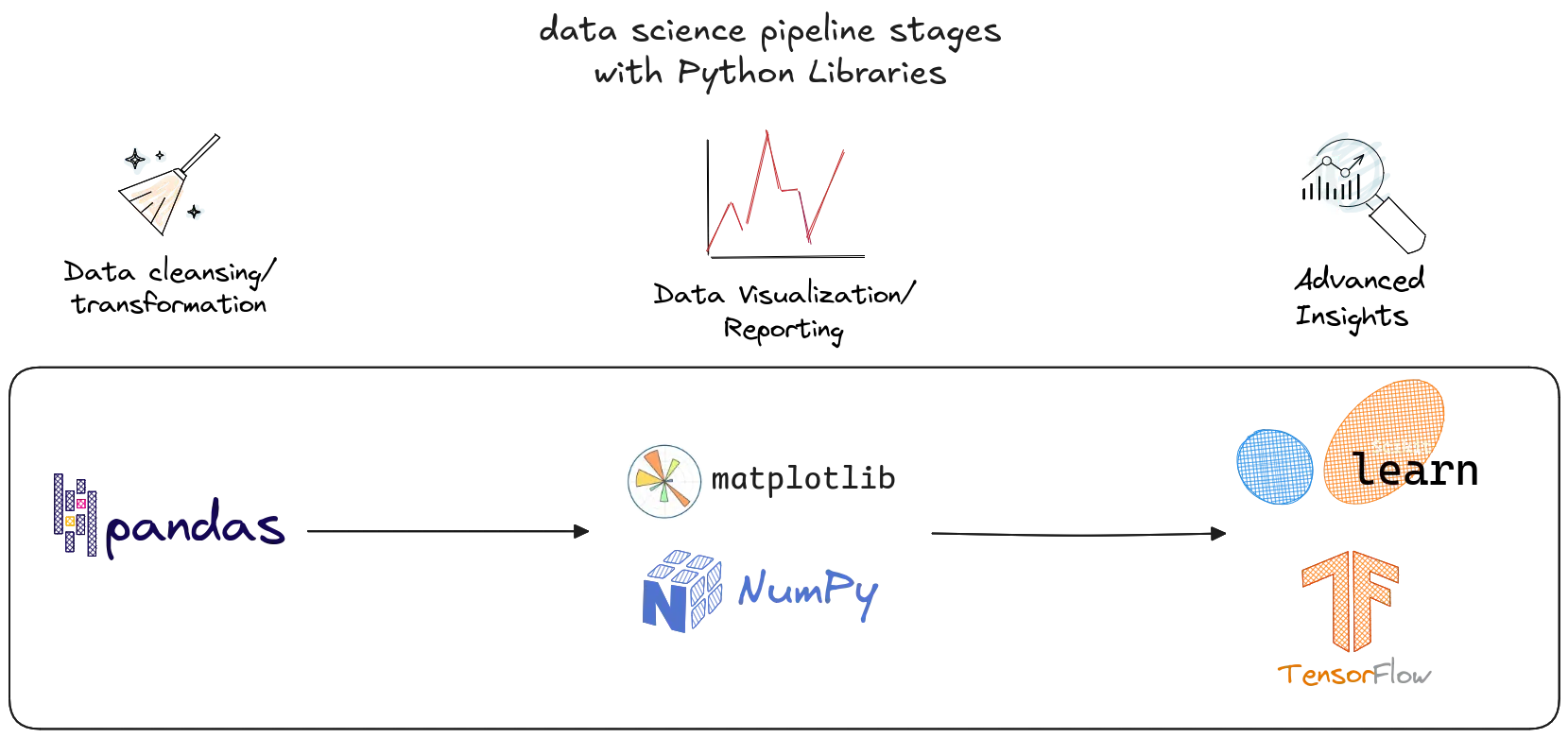

Handling large datasets and complex analyses requires more than spreadsheets. Libraries like NumPy, Pandas, and Matplotlib provide foundational support for numerical operations, data manipulation, and visualization, making data science more efficient and scalable.

Retail Sales Boost with Python Libraries

Imagine a retail team aiming to boost sales by better understanding customer purchase patterns. They start by using Pandas to clean and manipulate messy sales spreadsheets: filtering transactions, handling missing data, and grouping by customer segments with just a few lines of code. Next, NumPy helps them efficiently perform numerical calculations, like computing average purchase values and seasonal trends.

For visual insights, Matplotlib and Seaborn come into play, creating clear charts that highlight peak buying times and popular products. Meanwhile, Scikit-learn powers predictive models that forecast future sales based on historical data, helping the team plan inventory smartly.

import pandas as pd import numpy as np # Load dataset df = pd.read_csv("sales_data.csv") # Basic data manipulation df['Total'] = df['Quantity'] * df['Price'] # Summary stats with NumPy mean_sales = np.mean(df['Total']) print(f"Average sales: {mean_sales:.2f}")

To speed up certain data-heavy calculations, they use Numba to just-in-time compile Python functions, improving performance without rewriting code. And if the project requires automation, (e.g. scraping competitor pricing data) they rely on Selenium to control browsers and extract information automatically.

This combination of libraries allows the retail team to move from raw spreadsheet chaos to actionable insights swiftly, demonstrating why Python’s data science ecosystem is essential for real-world projects.

Pitfalls to Avoid When Choosing Libraries

Choosing the right Python libraries can be tricky. Python’s inherent speed limitations mean that for performance-critical tasks, relying solely on pure Python libraries might slow you down. That’s why libraries like Numba (which uses just-in-time compilation) or PySpark (which distributes computation) are crucial for scaling, but they also add complexity and require learning new paradigms.

Not considering the learning curve is a mistake. Some powerful libraries (e.g., FastAPI for web APIs or PyTorch for deep learning) require time investment, so evaluate whether the team’s skill set aligns with the library before adopting it. Balancing performance, simplicity, and maintainability is key when selecting Python data science tools.

The Essential Libraries

-

Core data manipulation & analysis:

- NumPy: Efficient numerical computing

- Pandas: Powerful data manipulation

- Matplotlib & Seaborn: Data visualization and statistical plots

-

Machine learning & AI:

- Scikit-learn: Classic ML algorithms

- PyTorch: Deep learning and neural networks

- Numba: Speeding up Python code via JIT compilation

-

Web & Automation tools:

- FastAPI: Modern web APIs

- Selenium: Browser automation

-

Data handling & NLP:

- SQLAlchemy: Database toolkit

- SpaCy: Advanced NLP

-

Big data & computer vision:

- PySpark: Scalable big data processing

- OpenCV: Computer vision tasks

FAQ

How do I set up a Python data science environment?

The easiest way is to install Python via Anaconda, which comes bundled with most essential libraries like NumPy, Pandas, and Matplotlib. For interactive coding and visualization, Jupyter Notebook is a popular choice. If you run into issues, resources like official documentation and Stack Overflow provide step-by-step troubleshooting guides.

Which Python data science libraries do users recommend the most?

According to user feedback, Pandas and Scikit-learn are highly praised for their ease of use and robust functionality. One data scientist remarked, “These libraries transformed our workflow and saved hours weekly.” Additionally, benchmark reports consistently rank NumPy as the top performer for numerical operations.

What are the future trends in Python data science libraries?

The integration of AI and machine learning is driving rapid advancements in Python libraries. Tools like PyTorch and FastAPI are expected to gain even more features and adoption. Staying current with these evolving libraries ensures you can leverage the latest innovations in your data science projects.

Streamline Collaboration and Turn Insights into Action

Managing tools and sharing insights across teams can be a significant challenge, often leading to inefficiencies and delays in decision-making. Without a streamlined process, teams can struggle to collaborate effectively, leading to disjointed workflows and a lack of transparency. This can result in missed opportunities and slowed project timelines. Try Briefer for free and turn your Python-powered insights into collaborative, interactive reports with ease.